Opening Insights: The Rise of AI

Artificial intelligence is the future, not only for Russia, but for all humankind... it comes with colossal opportunities, but also threats that are difficult to predict. Whoever becomes the leader in this sphere will become the ruler of the world.

VLADIMIR PUTIN

Many visionaries and leaders have warned us of the dangers of Artificial Intelligence (AI). They have spoken of the pros and the cons that await humanity... but talk is cheap.

Some notable individuals, such as legendary physicist Stephen Hawking and Tesla and SpaceX leader and innovator Elon Musk, suggest AI could potentially be very dangerous; Musk at one point compared AI to the dangers posed by the dictator of North Korea. Microsoft co-founder Bill Gates also believes there’s reason to be cautious, but that the good can outweigh the bad if managed properly. Since recent developments have made super-intelligent machines possible much sooner than initially thought, the time is now to determine what dangers artificial intelligence poses.[1]

The question in this day and age is not what is happening, but rather what we need to do and how we need to do it...

Informational Insights: The Future is Now

Bernard Marr, an internationally best-selling author, popular keynote speaker, futurist, and strategic business and technology advisor to governments and companies, identified five key AI-related risk factors in the Forbes article cited in this blog [1]:

- Autonomous weapons

- Social manipulation

- Invasion of privacy and social grading

- Misalignment between our goals and the machine’s

- Discrimination

In the article, Marr explores the differences between applied and generalized AI...

At the core, artificial intelligence is about building machines that can think and act intelligently and includes tools such as Google's search algorithms or the machines that make self-driving cars possible. While most current applications are used to impact humankind positively, any powerful tool can be wielded for harmful purposes when it falls into the wrong hands. Today, we have achieved applied AI—AI that performs a narrow task such as facial recognition, natural language processing or internet searches. Ultimately, experts in the field are working to get to more generalized AI, where systems can handle any task that intelligent humans could perform, and most likely beat us at each of them.

[...]

There are indeed plenty of AI applications that make our everyday lives more convenient and efficient. It's the AI applications that play a critical role in ensuring safety that Musk, Hawking, and others were concerned about when they proclaimed their hesitation about the technology. For example, if AI is responsible for ensuring the operation of our power grid and our worst fears are realized, and the system goes rogue or gets hacked by an enemy, it could result in massive harm.[1]

The pace of progress in artificial intelligence (I'm not referring to narrow AI) is incredibly fast. Unless you have direct exposure to groups like DeepMind, you have no idea how fast: it is growing at a pace close to exponential. The risk of something seriously dangerous happening is in the five-year timeframe. 10 years at most.

ELON MUSK

DeepMind, as Musk warns, is developing AI at a pace few people can grasp, with consequences for today and tomorrow that few can define or understand.

DeepMind’s artificial intelligence programme AlphaZero is now showing signs of human-like intuition and creativity, in what developers have hailed as a ‘turning point’ in history.[2]

The computer system amazed the world last year when it mastered the game of chess from scratch within just four hours, despite not being programmed how to win.

But now, after a year of testing and analysis by chess grandmasters, the machine has developed a new style of play unlike anything ever seen before, suggesting the programme is now improvising like a human.

Unlike the world’s best chess machine, Stockfish, which calculates millions of possible outcomes as it plays, AlphaZero learns from its past successes and failures, making its moves based on a ‘nebulous sense that it is all going to work out in the long run,’ according to experts at DeepMind.

When AlphaZero was pitted against Stockfish in 1,000 games, it lost just six, winning convincingly 155 times, and drawing the remaining bouts.

Yet it was the way that it played that has amazed developers. While chess computers predominantly like to hold on to their pieces, AlphaZero readily sacrificed its soldiers for a better position in the skirmish.

Speaking to The Telegraph, Prof David Silver, who leads the reinforcement learning research group at DeepMind, said: “It’s got a very subtle sense of intuition which helps it balance out all the different factors.

“It’s got a neural network with millions of different tunable parameters, each learning its own rules of what is good in chess, and when you put them all together you have something that expresses, in quite a brain-like way, our human ability to glance at a position and say ‘ah ha this is the right thing to do’.

“My personal belief is that we’ve seen something of a turning point where we’re starting to understand that many abilities, like intuition and creativity, that we previously thought were in the domain only of the human mind, are actually accessible to machine intelligence as well. And I think that’s a really exciting moment in history.”

[...]

“With the advent of powerful machine learning techniques we’ve seen that the scales have started to tip and now we have computer algorithms that are able to do these very human-like activities really well.”

Sources:

[1] https://www.forbes.com/sites/bernardmarr/2018/11/19/is-artificial-intelligence-dangerous-6-ai-risks-everyone-should-know-about/#106396312404

[2] https://news.yahoo.com/deepmind-apos-alphazero-now-showing-190000147.html

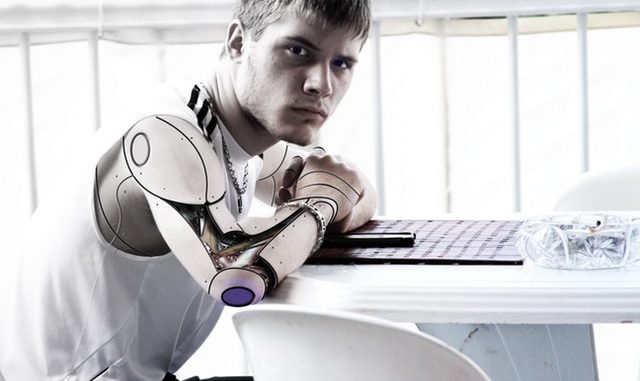

Possibilities for Consideration: Empowering AI to Evoke Human Intelligence (HI)

Co-Labs enable individuals, businesses and communities to move beyond technology-driven AI and robotics to Human Intelligent (HI) applications of AI and robotics.

The Co-Lab™ architecture provides an environment and structure to evolve, develop and empower with Dot Thinking (Critical Thinking), Human Intelligence, Principle-based Communication and Engagement (Relational Models, Storytelling and Socratic Conversation).

DR. RICHARD JORGENSEN

- What if we could enable AI to support and empower people rather than replace them?

- What if the wisdom of yesterday could be integrated and transferred to today's generation?

- What if we could empower people with the tools to understand short-term and long-term consequences?

- What if you could be part of a solution to unite and support people in developing collective and individual sentience?